Featured

-

Meeting (and subverting) college expectations

Editorials featured in the Forum section are solely the opinions of their individual authors. When I was accepted into Carnegie Mellon University, I never got the opportunity to fly out…

-

A voter’s guide to the upcoming PA primary election

On April 23, Pennsylvania will hold its primary elections. The winners of these races will…

-

Thousands gather outdoors to witness rare solar eclipse

By Sam Bates and Arden Ryan Just after 2 p.m. on April 8, the Pittsburgh sky began to darken, creatures…

-

Students enjoy Carnival despite rainy weather

By Nina McCambridge Last week, tents and rides began going up on the Cut as students lugged wood to Midway.…

-

What I did this weekend

Before you read, look at this and this Calvin and Hobbes strip; they’re loosely the inspiration. I give you the…

-

Buggy Sweepstakes final race results

After a rainy Friday canceled the first day of Buggy rolls, Raceday 2024 started and finished on Saturday, April 13. …

-

‘The Little Mermaid’ review

By Savannah Milam This Carnival weekend, Scotch’n’Soda’s production of Disney’s “The Little Mermaid” took audiences on a journey under the…

-

Softball beats NYU twice in doubleheader

Women’s golf and softball dominated, while other CMU Sports also excelled!

-

Bill Nye the Science Guy visits CMU for Carnival

On Thursday, April 11 at 6:30 p.m., two long lines wound through the Cohon University…

-

The science behind predicting solar and lunar eclipses

When the solar eclipse happened in Pittsburgh last Monday, we knew everything about it: The…

-

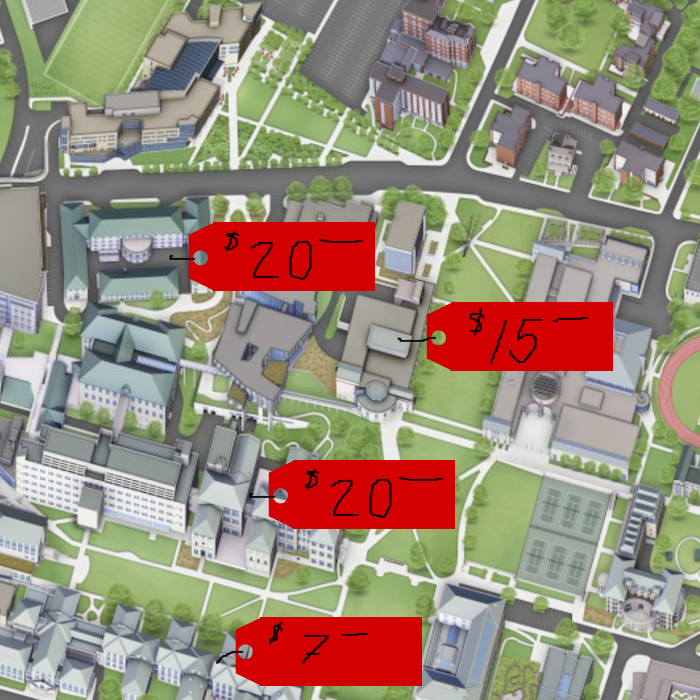

Video game-themed booths line Midway, SDC wins best overall

Every year in the week leading up to Carnival, many organizations on campus call all…

-

CMU holds Spring farmers market

On Tuesday, April 9, the Spring Farmers Market returned to Carnegie Mellon. Vendors at the…

-

EdBoard: Welcome to the Carnival, folks

By The Tartan Editorial Board Editorials featured in the Forum section are solely the opinions…

-

There’s too much merch!

Editorials featured in the Forum section are solely the opinions of their individual authors. No…

-

Novel-tea: How many chili peppers?

Editorials featured in the Forum section are solely the opinions of their individual authors. I…

-

Robotics Institute researchers showcase projects

On Friday, April 12, the Robotics Institute offered tours of some of its laboratories. First,…

-

The lifespan of game consoles: From birth to obsolescence

On April 8, Nintendo shut down online services for the editions of the 3DS and…

-

Case Watch

BOOOOO SPARTANS

-

NBA Regular season wrap up: surprises

As the NBA regular season wraps up, let’s reflect on some favorite surprises.

-

Foul Play: The NCAA

The University of Connecticut’s sixth men’s basketball national title highlights a tremendous year in NCAA…

-

Capitalism (advertising) isn’t fun anymore

Commercials aren’t fun anymore. I promise I’m not going to rant about how they put…

-

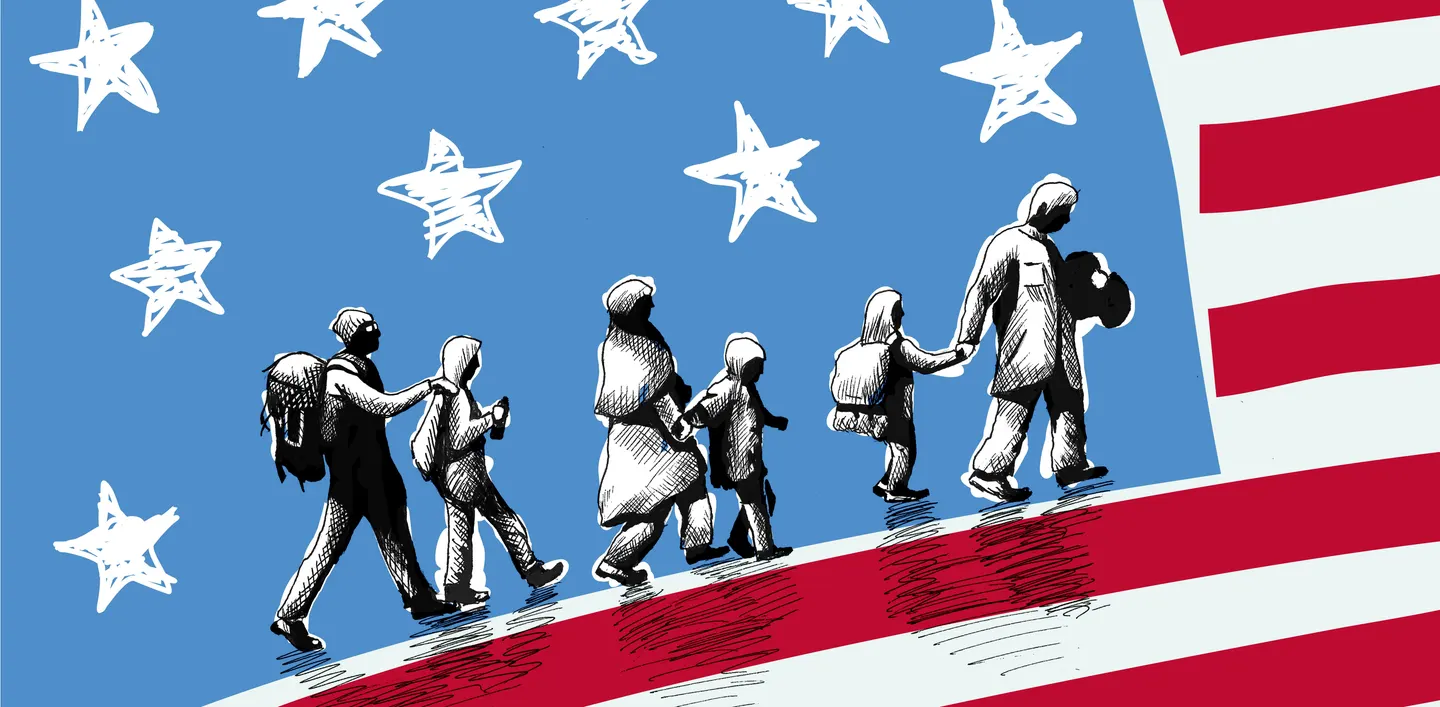

The tired, the poor, the huddled masses yearning to be free

“One star is for Alaska… One star is for Nebraska… One star is North Dakota……

-

Standing on the corner where the sidewalk ends

Have you ever read Shel Silverstein? If you haven’t, I’d recommend sampling a few of…